Building an LLM Chat & Task Bot with Durable Execution

Stephan Ewen

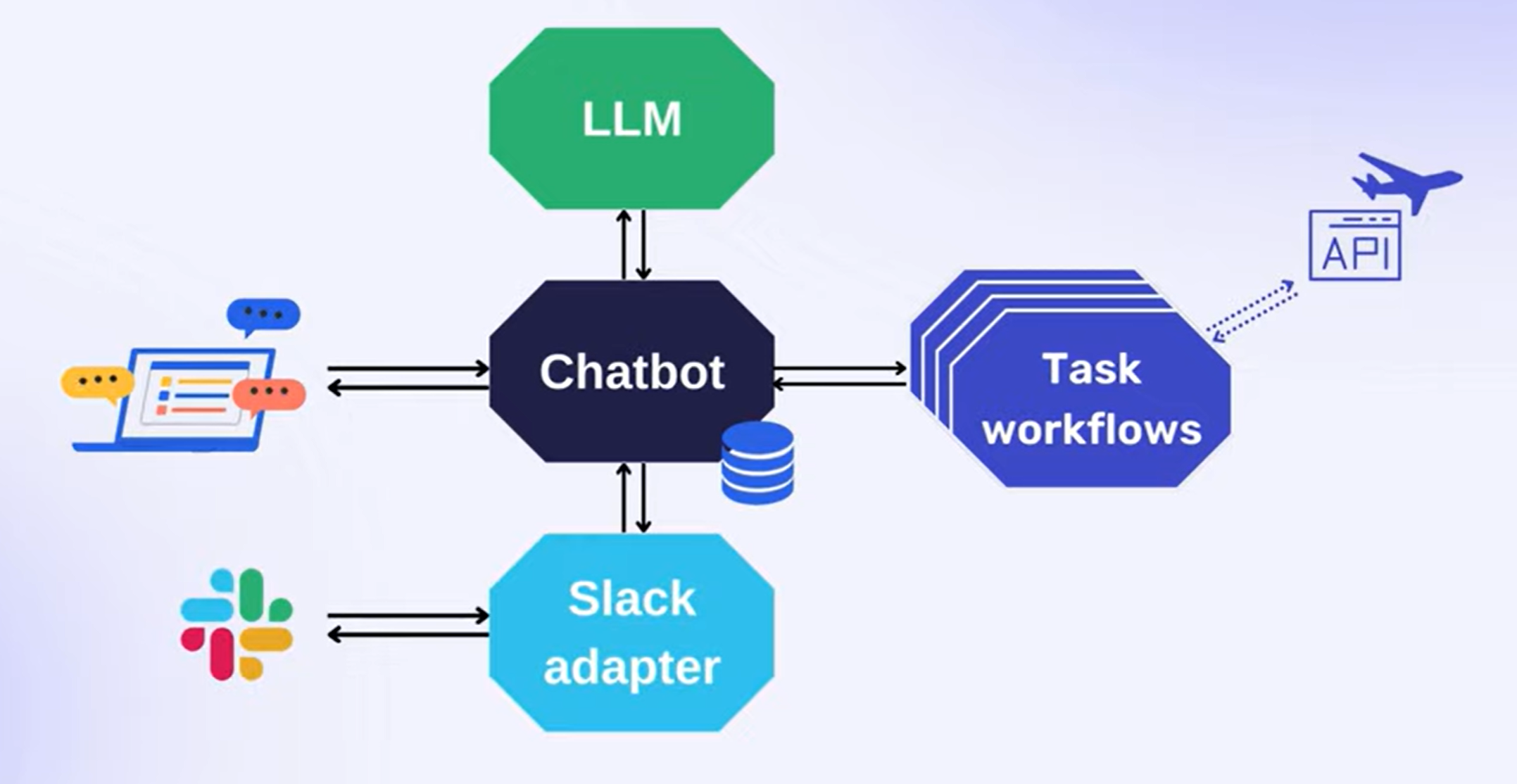

Let's build a chat bot with Restate. The chat bot will answer questions and run certain tasks on our behalf, like setting reminders and watching the price of flight routes. It uses an LLM to process the language and generate actions (starting / cancelling / checking tasks). Here is how this looks when using the bot from Slack.

Video: Building an AI Chat and Agent System with Restate

You can find the code in our example repository.

What do we need from our Infrastructure?

Besides the LLM API (here GPT-4o) and the external APIs for specific tasks, what do we need from an infrastructure perspective?

- Storage for the chat histories - one history per session

- Storing the set of ongoing tasks - one set per session

- Ability to spawn async task workflows (check flight APIs every 6 hours)

- Scale-to-zero for the task workflows (they are mostly just pausing until the next step)

- Locking / queuing for chat messages and notifications from async tasks, to ensure a linear history

- Reliability around API calls, to handle transient connection issues

- Reliable async communication between the bot and tasks (decouple tasks, ensure tasks are created reliably once)

For the Slack integration:

- Persistently queuing webhooks (notification of new messages) and processing them asynchronously

- State for deduplicating messages (webhooks may arrive more than once)

- Posting placeholder messages ("bot is thinking/typing") and keeping track of their timestamps to replace them with the actual answers later.

- Reliably awaiting the chat bot response (which might take a while)

For a possible web integration:

- Decoupling the message from the page session, so that hitting refresh/back/forward at the wrong time does not re-trigger any messages and LLM calls

- Idempotency / deduplication for message sending to avoid duplicates

The good news is: Restate helps with all of that 🥳 Let's look at how.

The Chat Bot and Session Management

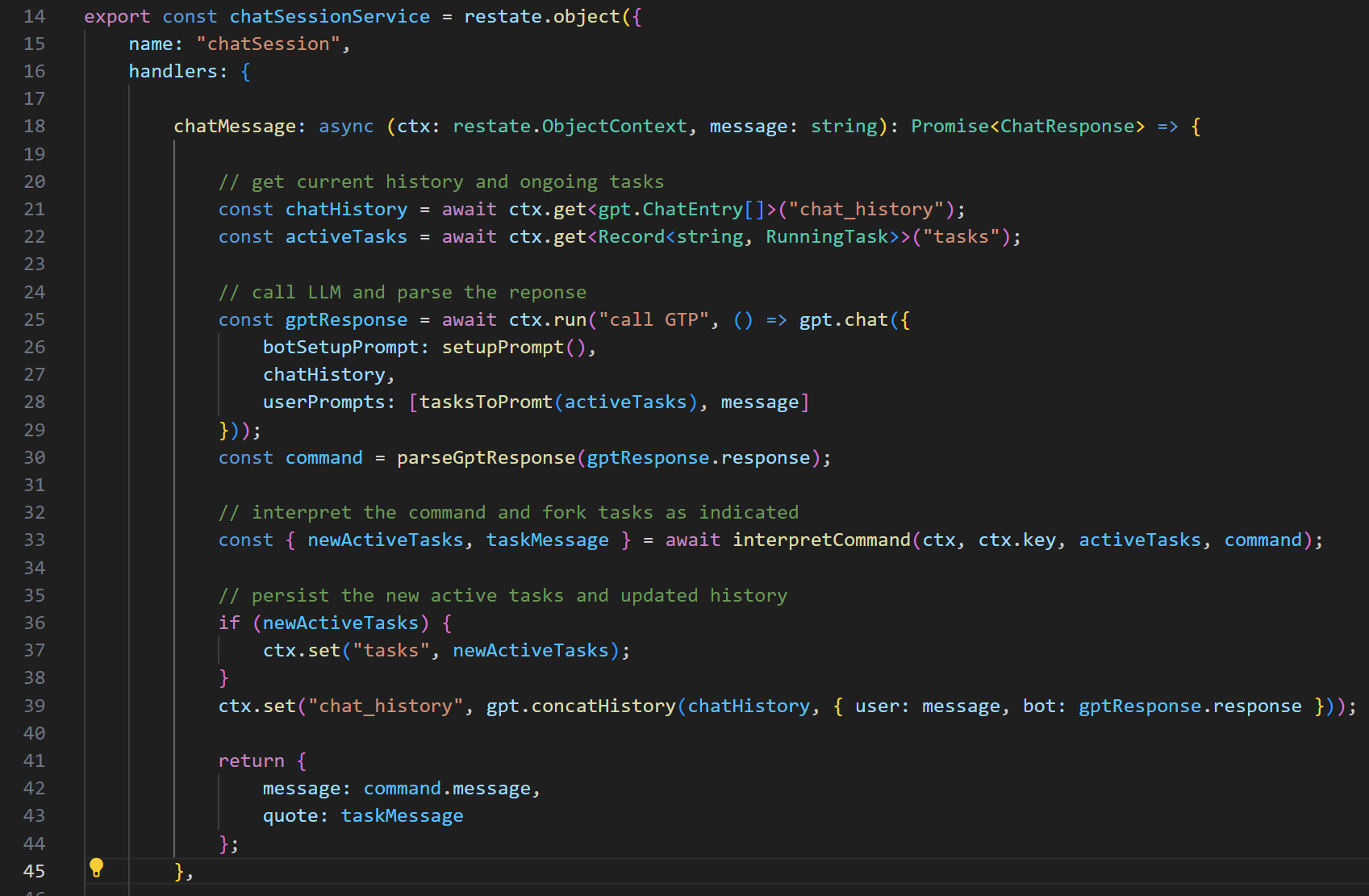

chat.ts has the core chat message logic. Restate's Virtual Objects match the requirements almost too perfectly.

Virtual Objects maintain consistent state per object: Calls to chatSession/maria/chatMessage and chatSession/victoria/chatMessage will automatically have separate isolated views on state.

A single object executes exclusively one method at a time and queues concurrent invocations persistently.

The chat bot code accesses (lines 21-22) and updates (lines 36-39) that state for chat history and ongoing tasks directly without worrying about transactionality, because state is directly attached to the object and exclusively owned by one method invocation at a time.
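To make those two properties concrete (state isolated per key, one invocation at a time per key), here is a minimal in-memory sketch. It is not the Restate SDK and not the code from chat.ts: `KeyedObjects`, `invoke`, `SessionState`, and `chatMessage` are hypothetical names, and nothing here is persisted. Restate provides the same semantics durably.

```typescript
// In-memory illustration only. All names are hypothetical, not Restate APIs.
type SessionState = { history: string[] };

class KeyedObjects {
  private states = new Map<string, SessionState>();
  // Per key, the promise of the most recently queued invocation.
  private tails = new Map<string, Promise<unknown>>();

  // Runs `handler` for `key` only after all earlier invocations
  // for the same key have finished. Other keys are not blocked.
  invoke<T>(key: string, handler: (state: SessionState) => Promise<T>): Promise<T> {
    let state = this.states.get(key);
    if (state === undefined) {
      state = { history: [] };
      this.states.set(key, state);
    }
    const current = state;
    const previous = this.tails.get(key) ?? Promise.resolve();
    const run = previous.then(() => handler(current));
    // A failed handler must not block later invocations for this key.
    this.tails.set(key, run.catch(() => undefined));
    return run;
  }
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Stand-in for the chat handler: the delay plays the role of the LLM call.
async function chatMessage(state: SessionState, message: string, delayMs: number): Promise<number> {
  await sleep(delayMs);
  state.history.push(message);
  return state.history.length;
}
```

Two messages sent to the key `maria` are appended in arrival order even if the first one takes longer, while a message to `victoria` runs against its own, separate history.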

Each Virtual Object method call is also durably executed (workflow-as-code). That lets our chat bot code simply call the LLM API without implementing retries (lines 25-29), and interpret commands and spawn tasks (line 33) without worrying about duplicating tasks or missing them.
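Durable execution is commonly implemented by journaling the result of each completed step, so that a retry replays recorded results instead of repeating the side effect. The sketch below shows that idea in plain TypeScript. `Journal`, `step`, and `runWithRetries` are hypothetical names, the journal lives in memory rather than in Restate, and the code is an illustration of the concept rather than an excerpt from the example repository.

```typescript
// In-memory illustration only. All names are hypothetical, not Restate APIs.
class Journal {
  private entries: unknown[] = [];
  private cursor = 0;

  // Called before the handler is re-run after a failure.
  rewind(): void {
    this.cursor = 0;
  }

  // Returns the recorded result if this step already completed in an
  // earlier attempt. Otherwise runs the action and records its result.
  async step<T>(action: () => Promise<T>): Promise<T> {
    if (this.cursor < this.entries.length) {
      return this.entries[this.cursor++] as T;
    }
    const result = await action();
    this.entries.push(result);
    this.cursor++;
    return result;
  }
}

// Re-runs the handler from the top until it succeeds or attempts run out.
// Completed steps are replayed from the journal, not executed again.
async function runWithRetries<T>(
  journal: Journal,
  handler: (journal: Journal) => Promise<T>,
  maxAttempts: number,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    journal.rewind();
    try {
      return await handler(journal);
    } catch (error) {
      lastError = error;
    }
  }
  throw lastError;
}
```

If the LLM call is the first step and a later step fails transiently, the retry reuses the recorded LLM answer and only repeats the step that did not complete.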

- Storage for the chat histories - one history per session ✅

- Storing the set of ongoing tasks - one set per session ✅

- Locking / queuing for chat messages and notifications from async tasks, to ensure a linear history ✅

- Reliability around API calls, to handle transient connection issues ✅

The Tasks

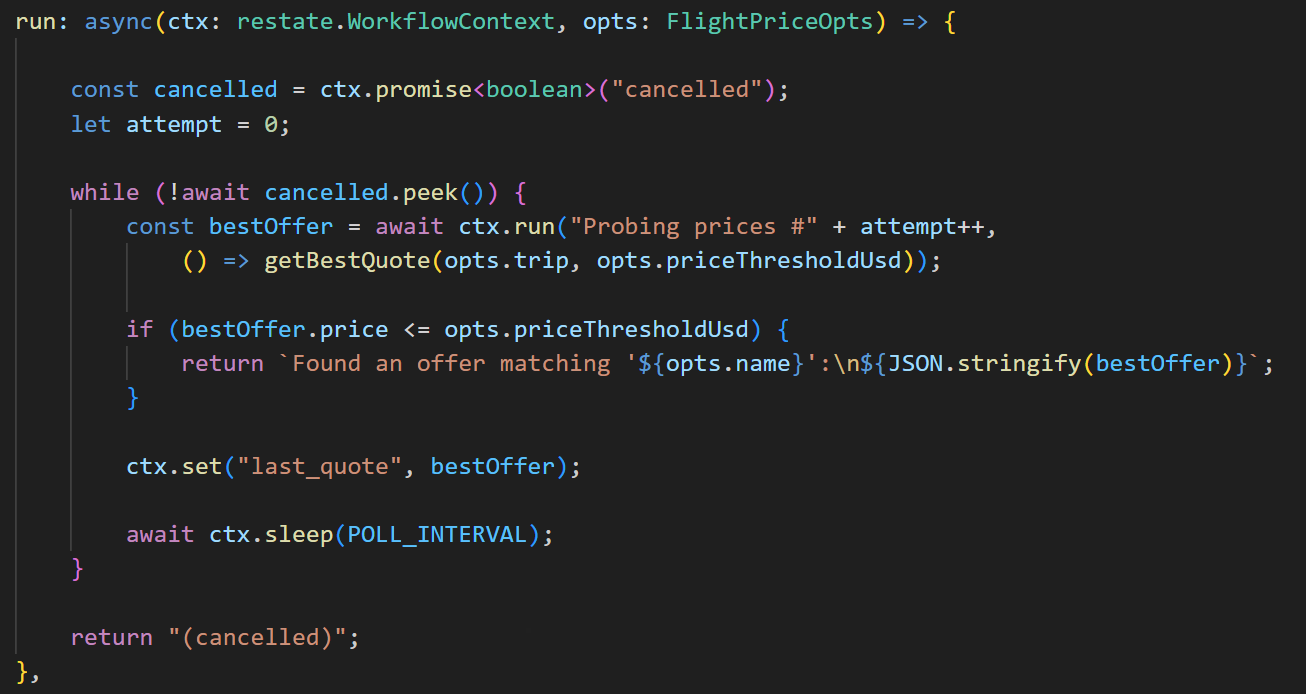

The tasks created by the bot are simple Restate workflows. They are started and queried by exactly-once RPCs like any other service. Let's look at flight_prices.ts as an example.

This workflow just loops until it finds a matching price. It uses a durable promise as the cancellation signal (line 31), calls the price API (lines 32-33), and does a durable sleep until it is time to check the API again. It stores the last found price in its state (line 39) so the chat bot's command interpreter can access it if needed.

Durable sleeps and promises allow the workflow code to suspend until the sleep has elapsed or the promise has completed. Because a suspended workflow is only pausing until its next step, it is cost-efficient to deploy those workflows on FaaS.
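The shape of that loop can be sketched in plain TypeScript. This is not the code from flight_prices.ts: `watchPrice`, `getPrice`, and `WatchResult` are hypothetical names, and the sleep and the cancellation promise are ordinary in-memory promises. In Restate both would be durable, so the process would not need to stay alive between checks.

```typescript
// In-memory illustration only. All names are hypothetical, not Restate APIs.
interface WatchResult {
  outcome: "matched" | "cancelled";
  lastPrice: number | undefined;
}

async function watchPrice(
  getPrice: () => Promise<number>,
  threshold: number,
  intervalMs: number,
  cancelled: Promise<void>,
): Promise<WatchResult> {
  const state = { cancelled: false };
  void cancelled.then(() => {
    state.cancelled = true;
  });

  let lastPrice: number | undefined;
  while (!state.cancelled) {
    lastPrice = await getPrice();
    if (lastPrice <= threshold) {
      return { outcome: "matched", lastPrice };
    }
    // Wait for the next check, but wake up early if the task is cancelled.
    let timer: ReturnType<typeof setTimeout> | undefined;
    const nextCheck = new Promise<void>((resolve) => {
      timer = setTimeout(resolve, intervalMs);
    });
    await Promise.race([nextCheck, cancelled]);
    clearTimeout(timer);
  }
  return { outcome: "cancelled", lastPrice };
}
```

Racing the sleep against the cancellation signal means a cancelled task stops right away instead of waiting for the next scheduled check.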

- Ability to spawn async task workflows ✅

- Scale-to-zero for the task workflows ✅

- Reliable (exactly once) async communication between bot and tasks ✅

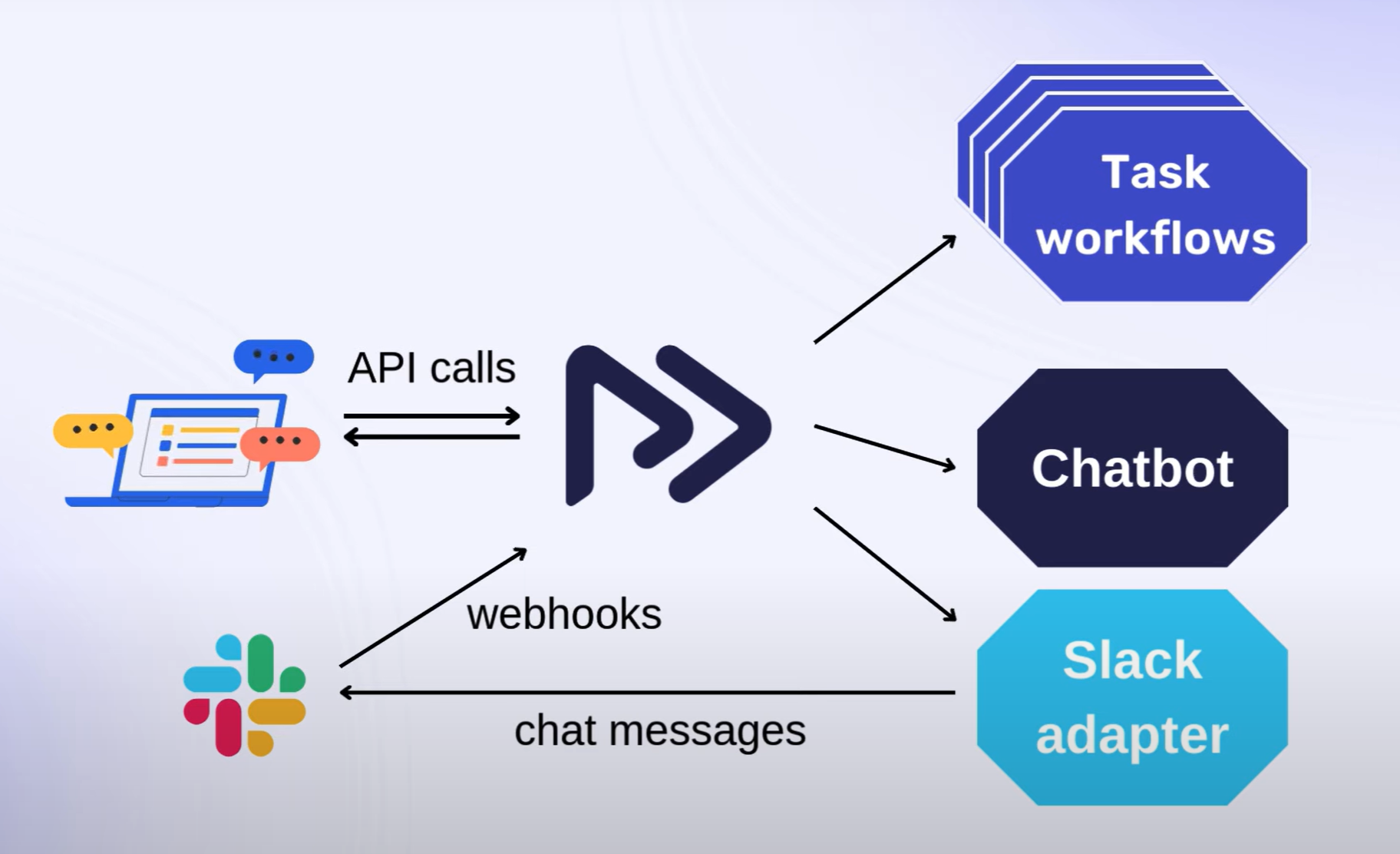

Slack Adapter

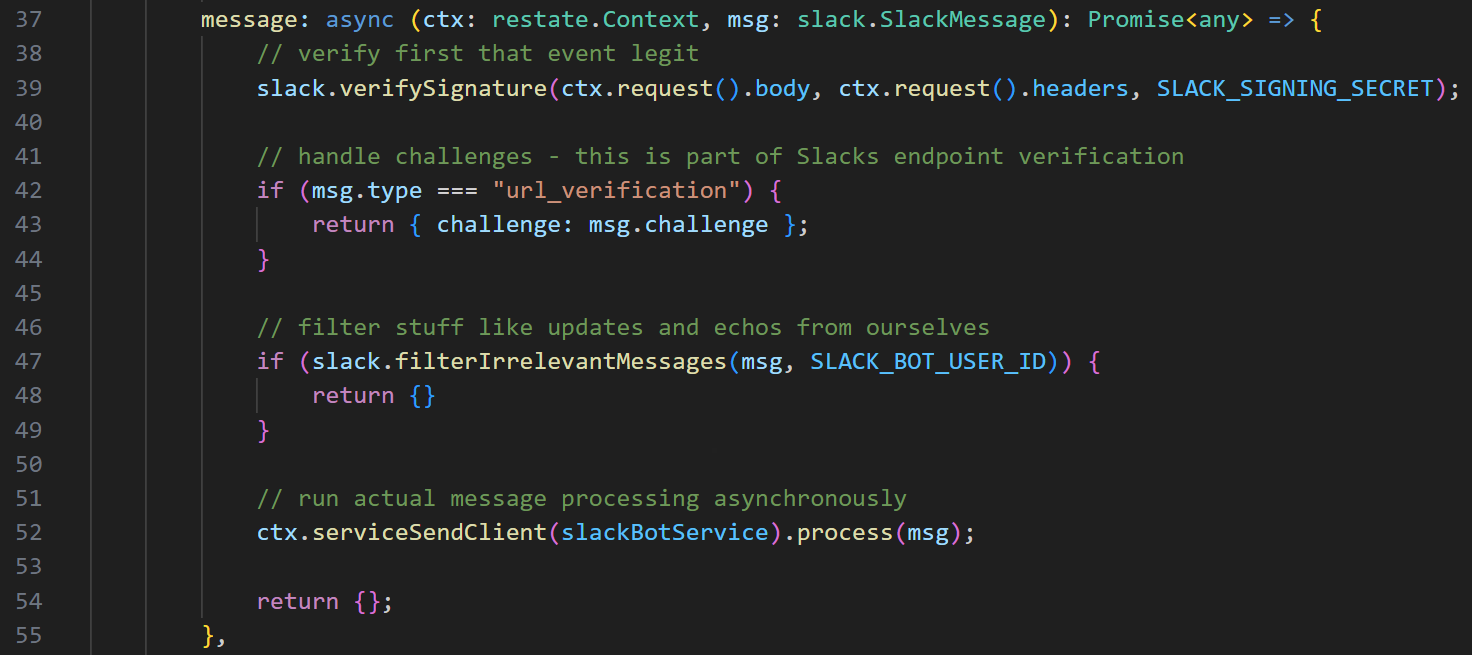

Inside slackbot.ts we manage all Slack-related logic.

The first thing we need to do is persist and acknowledge the webhook that the bot gets for every Slack message. We configured the Slack app so that the webhook lands in the message handler. That handler does the least possible work (verify and filter) before forwarding the event to the process handler and acknowledging the hook. The forwarding is an operation that is persistent in Restate.
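As a rough sketch of that "verify, filter, forward, acknowledge" shape: the event type below is a simplified, hypothetical stand-in for Slack's payload, and `makeMessageHandler`, `isAuthentic`, and `enqueue` are made-up names. In the real setup the forwarding step is a call that Restate persists, whereas here it is just a callback.

```typescript
// In-memory illustration only. Simplified, hypothetical event shape
// (not Slack's actual payload) and hypothetical function names.
interface IncomingEvent {
  kind: string;
  channel: string;
  messageId: string;
  text: string;
  fromBot: boolean;
}

type Ack = { status: number };

function makeMessageHandler(
  isAuthentic: (event: IncomingEvent) => boolean,
  enqueue: (event: IncomingEvent) => void,
): (event: IncomingEvent) => Ack {
  return (event) => {
    // Verify: reject requests that fail the authenticity check.
    if (!isAuthentic(event)) {
      return { status: 401 };
    }
    // Filter: acknowledge but ignore anything that is not a user message.
    if (event.kind !== "message" || event.fromBot) {
      return { status: 200 };
    }
    // Forward for asynchronous processing, then acknowledge immediately.
    enqueue(event);
    return { status: 200 };
  };
}
```

The handler never waits for the chat bot, so the webhook is acknowledged quickly regardless of how long the answer takes.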

- Persistently queuing webhooks and processing them asynchronously ✅

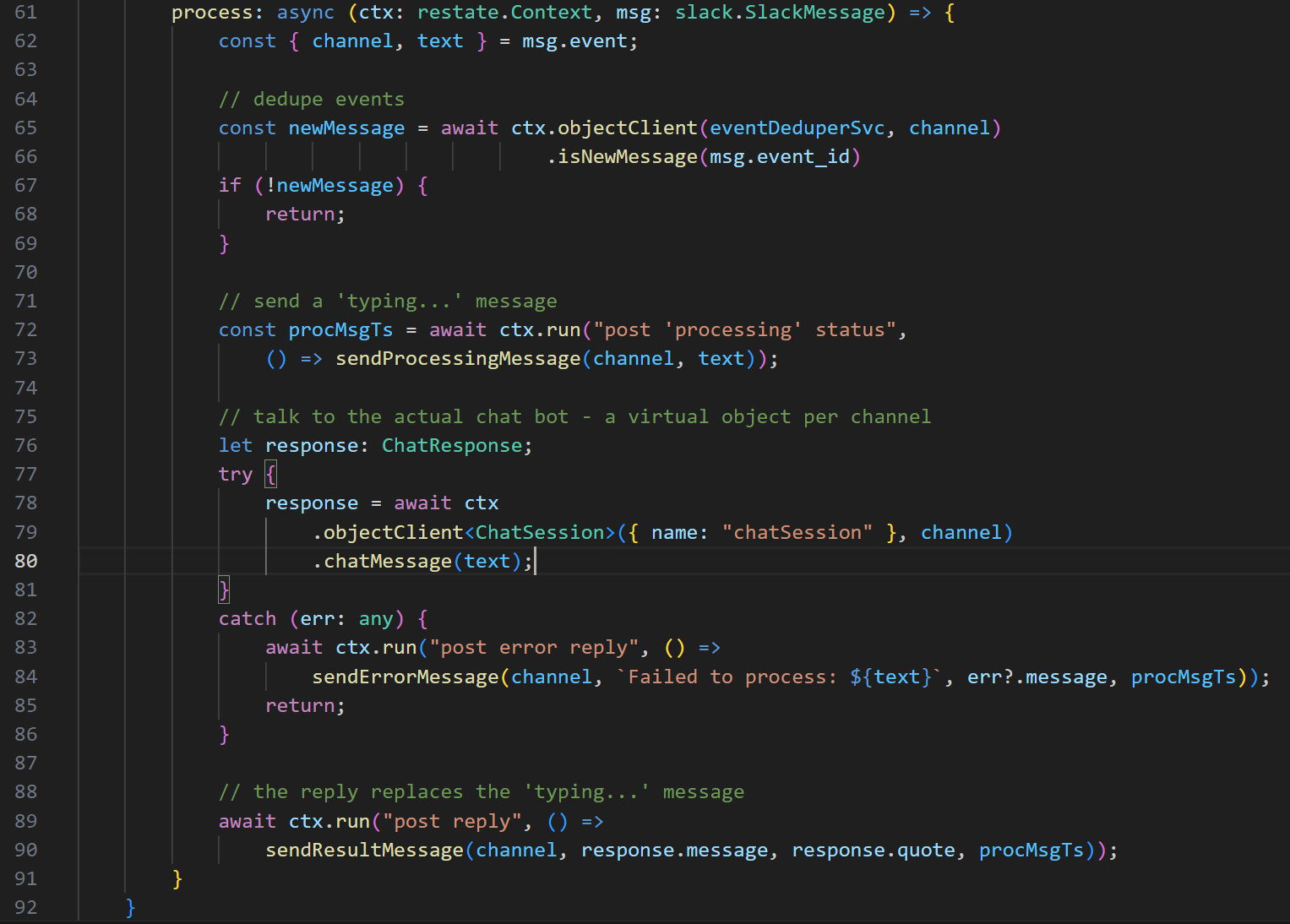

The interesting part happens in the process function. We ask an auxiliary Virtual Object (one per Slack channel) to track message IDs so we can deduplicate events (lines 65-69).
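The deduplication logic itself is small. Here is a minimal in-memory sketch, assuming each event carries a channel and a message ID. `MessageTracker` and `markSeen` are hypothetical names; in the Slack adapter this state lives in a Virtual Object per channel instead of a local map.

```typescript
// In-memory illustration only. Names are hypothetical, not Restate APIs.
class MessageTracker {
  private seenByChannel = new Map<string, Set<string>>();

  // Returns true the first time a message ID is seen in a channel,
  // and false for every repeated delivery of the same ID.
  markSeen(channel: string, messageId: string): boolean {
    let seen = this.seenByChannel.get(channel);
    if (seen === undefined) {
      seen = new Set<string>();
      this.seenByChannel.set(channel, seen);
    }
    if (seen.has(messageId)) {
      return false;
    }
    seen.add(messageId);
    return true;
  }
}
```

The process step would continue only when `markSeen` returns true, and drop the event otherwise.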

We send a placeholder message (a 'typing...' animation) and remember its timestamp (lines 72-73). The Slack bot then calls the actual chat bot (lines 78-80), and simply relies on durable execution and exactly-once messaging semantics to make this interaction automatically robust. If the chat bot takes long to respond, the Slack bot can also suspend and resume once the response is there.

Finally, the bot uses the previously remembered timestamp to replace the typing... message with the chat bot's answer (lines 89-90).
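Put together, the placeholder flow looks roughly like the sketch below. `ChatClient`, `postMessage`, `updateMessage`, and `answerWithPlaceholder` are hypothetical names standing in for the Slack client calls and the call to the chat bot. In slackbot.ts each of these steps is durably executed, which this plain TypeScript version does not attempt to reproduce.

```typescript
// In-memory illustration only. The interface is a hypothetical stand-in
// for a Slack client, not the real Slack SDK.
interface ChatClient {
  // Posts a message and returns its timestamp, which identifies it later.
  postMessage(channel: string, text: string): Promise<string>;
  // Replaces the text of the message with the given timestamp.
  updateMessage(channel: string, timestamp: string, text: string): Promise<void>;
}

async function answerWithPlaceholder(
  slack: ChatClient,
  askChatBot: (text: string) => Promise<string>,
  channel: string,
  text: string,
): Promise<string> {
  // 1. Show the user that the bot is working and remember the timestamp.
  const placeholderTs = await slack.postMessage(channel, "typing...");
  // 2. Wait for the chat bot, however long it takes.
  const answer = await askChatBot(text);
  // 3. Replace the placeholder with the actual answer.
  await slack.updateMessage(channel, placeholderTs, answer);
  return placeholderTs;
}
```

Keeping the timestamp is what lets step 3 edit the existing message instead of posting a second one.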

- State for deduplicating messages ✅

- Placeholder messages, id (timestamp) tracking, replace them with the actual answers later ✅

- Reliably await chatbot response ✅

For a possible Web UI integration

Every request through Restate is persistent, asynchronous, and supports idempotency keys. When sending a message from a web page to Restate, the HTTP request can add an idempotency key (possibly persisted browser-side in site storage).

When making a repeated call with the same parameter (chat message) and idempotency key, Restate attaches that request to the ongoing invocation, re-connecting the calls.
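The "attach to the ongoing invocation" behavior can be illustrated with a map from idempotency key to the in-flight result. This is a minimal in-memory sketch with hypothetical names (`IdempotentInvoker`, `invoke`). Restate implements the equivalent on the server side and persists it, so it also survives page reloads and process restarts.

```typescript
// In-memory illustration only. Names are hypothetical, not Restate APIs.
class IdempotentInvoker<T> {
  private invocations = new Map<string, Promise<T>>();

  // The first call for a key starts the work. Repeated calls with the
  // same key get the same (possibly still pending) result back.
  invoke(key: string, run: () => Promise<T>): Promise<T> {
    const existing = this.invocations.get(key);
    if (existing !== undefined) {
      return existing;
    }
    const started = run();
    this.invocations.set(key, started);
    return started;
  }
}
```

A page that re-sends a message after a refresh, using the key it stored earlier, ends up waiting on the original invocation instead of triggering a second LLM call.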

- Hitting refresh/back/forward at the wrong time does not re-trigger messages or LLM calls ✅

- Avoiding message duplicates ✅

That's a wrap!

We hope you enjoyed this example and seeing how much a system like Restate can handle through its building blocks for compute (durable execution), state (Virtual Objects), and communication (durable RPC and futures/promises).

Let us know your thoughts on Twitter, Discord, or Slack and give Restate a try!